G'day All,

Sorry, this has grown.

Summary: Explain how work we've (not just me) been doing echoes much of what Derek said. Outline a problem facing Moodle and other large scale (multiple courses/contexts) learning analytics projects. Suggest a possible (difficult) solution.

The 4 paths

Colin Beer, Damien Clark, and I have been thinking about this sort of problem for a while.

Michael referenced some of this work earlier in this thread with what we now call the 4 paths for learning analytics. It was 3 paths in a 2014 paper but had evolved into 4 paths by the time of a talk at MoodleMootAU'2015.

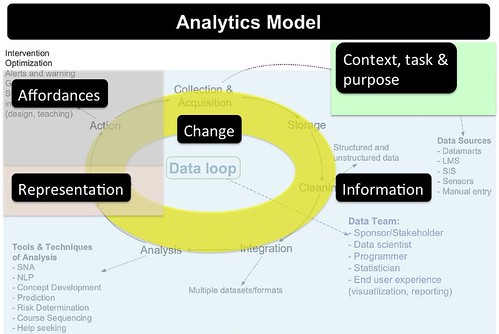

The paths are based on our observations of ways in which learning analytics are implemented. The 4 paths are:

- Do it to teachers.

- Do it for teachers.

- Do it with teachers.

- Teacher DIY.

"Know thy students" is an example of Teacher DIY from my practice.

Learning analytics projects in institutions tend to follow the "do it to" or "do it for" paths: large-scale institutional projects that assume a particular tool is applicable across all courses.

The problem of context

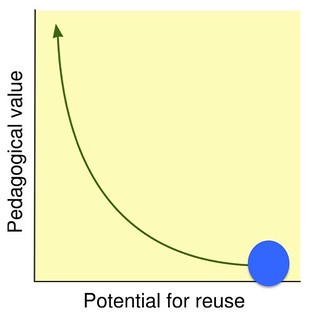

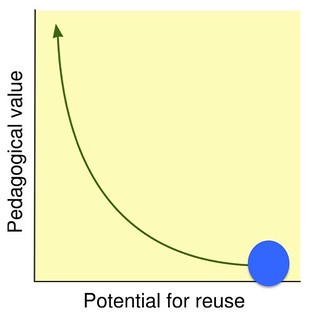

The problem with this is that the projects have to be designed for reuse across the broadest possible range of courses. As per Wiley's reusability paradox, this means that contextual details (e.g. specific details of the individual learners, the learning design, or information specific to the course) have to be removed. The cost of removing context is reduced pedagogical value.

The "do it to" and "do it for" paths have a tendency to remove context and reduce pedagogical value.

This might be why we get Dawson & McWilliam (2008) reporting:

> current LMS present poor data aggregation and similarly poor visualisation tools in terms of assisting staff in understanding ... student learning behaviour (p. 3)

and then 5 years later Corrin et al (2013) reporting:

> A common request that emerged across the focus groups was the ability to correlate data across systems

The request arises because, for most courses, Moodle is not the only system containing relevant data.

Categorising Moodle analytics tools with the 4 paths?

Earlier in the thread Michael asked:

> Could there be a way of categorising tools according to the three paths you suggested in your ASCILITE 2014 paper (do it to, do it for, do it with teachers)?

The problem or bias (one that perhaps needs to be disrupted) I bring to this question is that most of the Moodle tools (almost by definition) have been the result of the "do it to" or "do it for" paths, due to the nature of how these tools are developed and then evolve.

There's also the problem of how plugins get added to an institutional Moodle installation. There are typically institutional gatekeepers who evaluate what gets added, which tends to make the DIY approach impossible.

Of course, reality is never that simple and I'm sure there's typically a mix of the (first three) paths, but I do think there's a tendency toward "do it to" and "do it for".

Aligning learning analytics with learning design

Lockyer et al (2013) suggest that learning analytics needs to be better aligned with the specifics of the learning design, and include arguments such as (emphasis added):

> This leads to questions surrounding how analytics can begin to bridge the technical–educational divide to provide just-in-time, useful, and context-sensitive feedback on how well the learning design is meeting its intended educational outcomes. Here we argue that a critical step for moving forward on this agenda entails the establishment of methods for identifying and coding learning and teaching contexts. (p. 1446)

Subsequently they propose "two broad categories of analytic applications" (p. 1448):

- checkpoint analytics; and,

  > that is, the snapshot data that indicate a student has met the prerequisites for learning by accessing the relevant resources of the learning design. (p. 1448)

- process analytics.

  > These data and analyses provide direct insight into learner information processing and knowledge application (Elias, 2011) within the tasks that the student completes as part of a learning design.
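To make checkpoint analytics a little more concrete, here is a minimal Python sketch that checks an access log against the resources a learning design treats as prerequisites. It assumes a hypothetical log of (student, resource) pairs and made-up resource names; `checkpoint_status` is an illustrative helper, not part of Moodle or any existing plugin.

```python
from collections import defaultdict


def checkpoint_status(students, access_log, required_resources):
    """Map each student to the required resources they have not yet accessed.

    Hypothetical inputs (not a Moodle API):
      students           -- the course roster
      access_log         -- iterable of (student, resource) pairs
      required_resources -- what the learning design treats as prerequisites
    """
    required = set(required_resources)
    accessed = defaultdict(set)
    for student, resource in access_log:
        accessed[student].add(resource)
    # An empty set means the student has met the checkpoint.
    return {s: required - accessed[s] for s in students}


log = [
    ("alice", "week1-reading"),
    ("alice", "week1-video"),
    ("bob", "week1-reading"),
]
status = checkpoint_status(["alice", "bob", "carol"], log,
                           ["week1-reading", "week1-video"])
not_yet = [s for s, missing in status.items() if missing]
print(not_yet)  # ['bob', 'carol']
```

The counting is trivial. The hard part is knowing which resources count as prerequisites, and that is the learning-design-specific detail a generic tool doesn't have.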

The challenge is that these work best when aligned with the specific learning design.

I'm not sure that many of the existing Moodle (and elsewhere) learning analytics tools are specific to learning designs.

What might this look like?

A few weeks ago I gave a talk to the Uni of South Australia that expanded upon some of the above and closed with a couple of examples of what this might look like in Moodle. Here's one example.

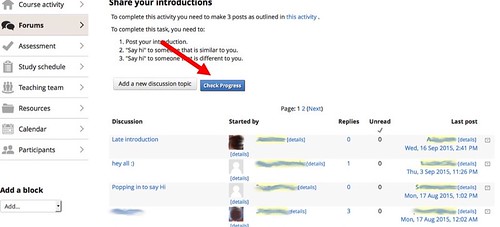

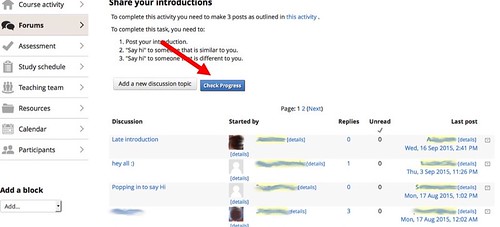

Near the start of my course I use a Moodle forum to run an icebreaker activity. Students have to:

- Post their introduction.

- Say hi to someone they think is similar to them.

- Say hi to someone they think is different to them.

i.e. they have to write a post and two replies. I use activity completion on this, but that doesn't tell me which students have written how many posts. It also doesn't scaffold the students, i.e. clearly tell them that they have 2 more posts to write.

With this learning design, a useful example of process analytics would be (as shown in the following image) a button I could press (in context) that would show me a list of students and their progress. It would be even more useful if it included a "Remind" button that would help me nudge students into completing the task.
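Behind such a button, the progress list for this particular design is mostly counting. The sketch below is one possible approach in Python. It assumes forum posts can be reduced to records holding an author and, for replies, the author of the parent post. The `Post` record, `icebreaker_progress`, and `reminders` are hypothetical names for illustration, not Moodle's forum API. The defaults (1 introduction, 2 replies) come from the activity above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Post:
    """A simplified, hypothetical forum post record (not Moodle's schema)."""
    author: str
    parent_author: Optional[str] = None  # None = a top-level introduction


def icebreaker_progress(students, posts, intros_needed=1, replies_needed=2):
    """Return {student: number of posts still to write} for the icebreaker."""
    progress = {}
    for student in students:
        mine = [p for p in posts if p.author == student]
        intros = sum(1 for p in mine if p.parent_author is None)
        # Only replies to *other* students count as "saying hi".
        replies = sum(1 for p in mine
                      if p.parent_author not in (None, student))
        progress[student] = (max(0, intros_needed - intros)
                             + max(0, replies_needed - replies))
    return progress


def reminders(progress):
    """Draft a nudge for every student who still has posts to write."""
    return {s: f"You have {n} more post{'s' if n != 1 else ''} to write."
            for s, n in progress.items() if n > 0}


posts = [Post("alice"), Post("bob"), Post("carol"),
         Post("alice", "bob"), Post("alice", "carol"), Post("bob", "alice")]
progress = icebreaker_progress(["alice", "bob", "carol", "dave"], posts)
print(progress)  # {'alice': 0, 'bob': 1, 'carol': 2, 'dave': 3}
print(reminders(progress)["bob"])  # You have 1 more post to write.
```

Counting posts by type, rather than relying on a single completion flag, lets the list say *how many* posts remain. Activity completion alone doesn't provide that scaffolding.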

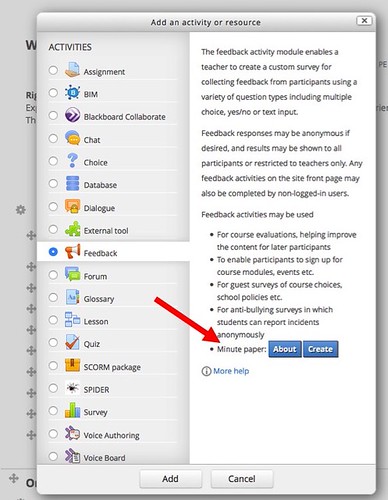

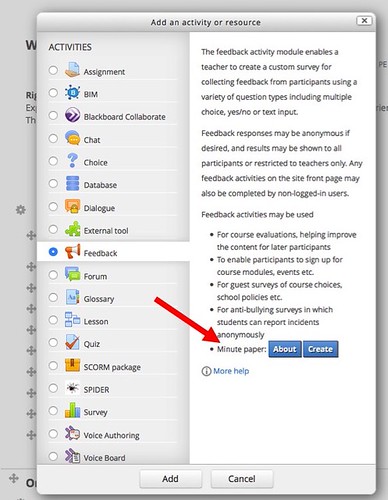

Another example, not quite related to learning analytics, might be to add some scaffolding to the Feedback tool so that it actively supports people in using it to implement Minute papers. Stead (2005) suggests that limited use of the Minute paper is "largely due to lack of knowledge of its existence and the perception that it would be too time-consuming to analyse responses".

Sounds like a job for learning analytics, in particular process analytics.

The challenge

The challenge here is how Moodle, and the institutions using it, could overcome the reusability paradox. Moodle has to be a tool that is usable across many different types of institutions, so adding the type of learning-design-specific examples above is difficult. But at the same time, it's the sort of contextual functionality that is required to ultimately increase pedagogical value.

Due to our individual contexts, we're currently exploring solutions to this via the "do it with" and DIY paths, i.e. we have to work outside of Moodle. Examples include Damien's Moodle Activity Viewer and my "Know thy students".

I imagine that people within the Moodle HQ context would see different possibilities. It would be interesting to hear what those might be.

David.